1. Spending $ on brain training isn’t so smart. It seems impossible to listen to NPR without hearing from their sponsor, Lumosity, the brain-training company. The target demo is spot on: NPR will be the first to tell you its listeners are the “nation’s best and brightest”. And bright people don’t want to slow down. […]

1. Open Innovation can up your game. Open Innovation → Better Evidence. Scientists with an agricultural company tell a fascinating story about open innovation success. Improving Analytics Capabilities Through Crowdsourcing (Sloan Review) describes a years-long effort to tap into expertise outside the organization. Over eight years, Syngenta used open-innovation platforms to develop a dozen data-analytics […]

1. Free beer for a year for anyone who can work perfume, velvety voice, and ‘Q1 revenue goals were met’ into an appropriate C-Suite presentation. Prezi is a very nice tool enabling you to structure a visual story, without forcing a linear, slide-by-slide presentation format. The best part is you can center an entire talk […]

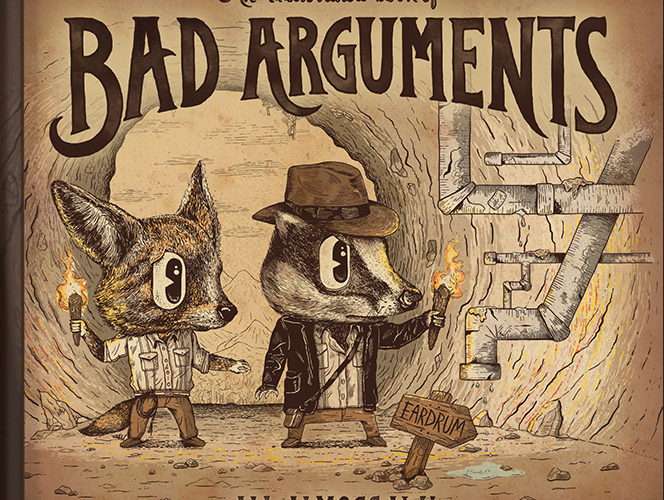

1. Bad logic → Bad arguments → Bad decisions. The Book of Bad Arguments is a simple explanation of common logical flaws / barriers to successful, evidence-based decisions. This beautifully illustrated work by Ali Almossawi (@AliAlmossawi) should be on everyone’s bookshelf. Now available in several languages. 2. Home visits for children → Lifelong benefits → […]

1. Mistakes we make when sharing insights. We’ve all done this: hurried to share valuable new information and neglected to frame it meaningfully, thus slowing the impact and possibly alienating our audience. Michael Schrage describes a perfect example, taken from The Only Rule Is It Has to Work, a fantastic book about analytics innovation. The […]

1. Poor kids already have grit: Educational Controversy, 2016 edition. All too often, we run with a sophisticated, research-based idea, oversimplify it, and run it into the ground. 2016 seems to be the year for grit. Jean Rhodes, who heads up the Chronicle of Evidence-Based Mentoring (@UMBmentoring), explains that grit is not a panacea for […]

1. Do we judge women’s decisions differently? Cognitive psychologist Therese Huston’s book is How Women Decide: What’s True, What’s Not, and What Strategies Spark the Best Choices. It may sound unscientific to suggest there’s a particular way that several billion people make decisions, but the author doesn’t seem nonchalant about drawing specific conclusions. The book […]

1. Magical thinking about ev-gen. Rachel E. Sherman, M.D., M.P.H., and Robert M. Califf, M.D., of the US FDA have described what is needed to develop an evidence generation system – and they must be playing a really long game. “The result? Researchers will be able to distill the data into actionable evidence that can ultimately […]

Three Ways of Getting to Evidence-Based Policy. In the Stanford Social Innovation Review, Bernadette Wright (@MeaningflEvdenc) does a nice job of describing three ideologies for gathering evidence to inform policy. Randomista: Views randomized experiments and quasi-experimental research designs as the only reliable evidence for choosing programs. Explainista: Believes useful evidence needs to provide trustworthy data […]

1. Human fallibility → Debiasing techniques → Better science. Don’t miss Regina Nuzzo’s fantastic analysis in Nature: How scientists trick themselves, and how they can stop. @ReginaNuzzo explains why people are masters of self-deception, and how cognitive biases interfere with rigorous findings. Making things worse are a flawed science publishing process and “performance enhancing” statistical […]